Welcome to Pic2Map’s documentation!

This plugin was developed during Gillian Milani’s master thesis at EPFL, in the LASIG laboratory. The plugin development is based on the following work: Timothée Produit and Devis Tuia. An open tool to register landscape oblique images and generate their synthetic models. In Open Source Geospatial Research and Education Symposium (OGRS), 2012.

The plugin is still under development, and you can share your comments with us: gillian.milani at epfl.ch

Already familiar with the plugin? Go to the Tricks for a comfortable experience section!

Concepts

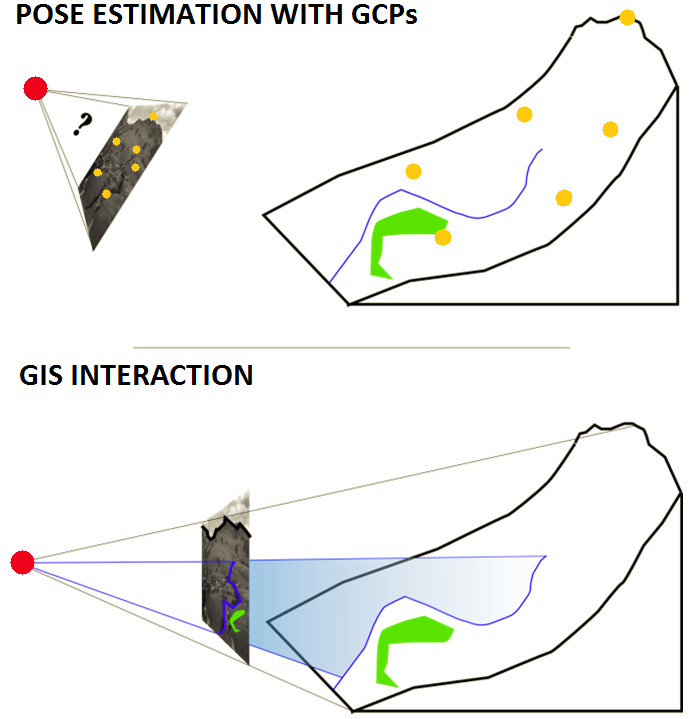

Pic2Map enables GIS functions in a picture. In essence, the plugin relates each pixel of a picture to its 3D world coordinates. To reach this goal, the position, the orientation and the internal parameters of the camera need to be determined from ground control points (GCPs). These parameters are used to orient the picture with respect to the corresponding Digital Elevation Model (DEM).
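As a rough illustration of the world-to-picture relationship, the following Python sketch projects a 3D point into pixel coordinates with a simple pinhole model, given a camera position, a rotation matrix and a focal length in pixels, with the central point at the picture center. The function name `project_point` and the axis convention (camera x to the right, y downwards, z along the viewing direction) are our assumptions, not the plugin's internals.

```python
def project_point(point, camera, rotation, focal, width, height):
    """Project a world point (x, y, z) to pixel coordinates (column, row).

    rotation: 3x3 world-to-camera rotation matrix given as a list of rows.
    focal: focal length in pixels; the central point is the picture center.
    Returns None for points behind the camera.
    """
    # Vector from the camera to the point, in world coordinates
    d = [p - c for p, c in zip(point, camera)]
    # Rotate into the camera frame (x right, y down, z along the viewing direction)
    xc, yc, zc = (sum(row[i] * d[i] for i in range(3)) for row in rotation)
    if zc <= 0:
        return None  # behind the camera: not visible in the picture
    # Perspective division, then shift the origin to the top-left pixel
    u = width / 2 + focal * xc / zc
    v = height / 2 + focal * yc / zc
    return u, v


# Camera at the origin looking north (world axes: East, North, Up)
LOOK_NORTH = [[1, 0, 0], [0, 0, -1], [0, 1, 0]]
print(project_point((10, 100, 5), (0, 0, 0), LOOK_NORTH, 1000, 1000, 750))
# → (600.0, 325.0)
```

The monoplotter performs the inverse operation: it starts from a pixel and looks for the DEM point that projects onto it.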

The Pic2Map plugin brings more than new capabilities to QGIS: it also brings new users! Consider using this tool if you deal with one of the following situations:

- Creation of orthorectified rasters from oblique photographs

- Determination of the orientation of a single camera

- Rapid mapping after disasters such as avalanches or landslides

- Recurrent mapping, such as maps of snow melt or traffic jams

- Mapping from terrestrial images

- Change detection from terrestrial images

- Augmentation of landscape pictures with geographic vector datasets

The steps to use Pic2Map are the following:

- Load a landscape picture with a corresponding DEM

- Determine the pose of the camera by providing GCPs (or by navigating in a virtual view)

- Use the "monoplotter" to project vector data into the photograph or to project the photograph onto the map.

Installation and testing dataset

You can download the plugin directly from the QGIS plugin repository.

Go to Plugins ‣ Manage and Install Plugins... ‣ Settings tab ‣ Enable experimental plugins... and add the repository URL. Then search for the plugin in the plugin list and enable it.

A technical introduction video can be found here. The original plugin folder contains a dataset for testing the plugin. Extract the data outside the original folder (for example, on the desktop) to be able to use them.

System requirements

The plugin was tested with QGIS 2.2 to 2.8.

OpenGL 3.0 is required for framebuffer support. If framebuffers are not supported, some tool capabilities are restricted.

You may have problems with OpenGL.GL under Ubuntu. In this case, run the following commands and try again:

- sudo easy_install pyopengl

- sudo apt-get install python python-opengl python-qt4 python-qt4-gl
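After installing the packages, you can check from a Python console (for example, the QGIS Python console) whether the PyOpenGL module loads. The function name below is hypothetical; the broad `except` is deliberate, since a missing system OpenGL library may also surface as an error at import time.

```python
def pyopengl_available():
    """Return True if the OpenGL.GL module (from PyOpenGL) can be imported."""
    try:
        from OpenGL import GL  # noqa: F401  provided by python-opengl / PyOpenGL
    except Exception:  # ImportError, or an error while loading the GL library
        return False
    return True


print(pyopengl_available())
```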

Data requirement

To use this plugin, you will need:

- An oblique picture (png, jpeg, etc.)

- A DEM in TIFF format (a 3D model cannot be generated from a single image)

- An orthoimage, optional (to digitize GCPs and render 3D views)

The DEM has to be consistent with the picture. To avoid bugs and keep the processing time reasonable, crop the DEM to the area visible in the picture. The speed of the plugin depends on the size of the DEM, so try to find a balance between performance and resolution. A TIFF file of about 1000 x 1000 pixels can be considered the limit for comfortable work.
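To respect the 1000 x 1000 pixel guideline while keeping the aspect ratio, you can compute a reduced size before rescaling with Raster ‣ Conversion ‣ Translate, which is a front end to GDAL's gdal_translate (its `-outsize` option sets the output size in pixels). A minimal Python sketch; the function and file names are hypothetical:

```python
import math


def rescaled_size(width, height, max_pixels=1000000):
    """Shrink (width, height) uniformly so that width * height <= max_pixels."""
    if width * height <= max_pixels:
        return width, height
    scale = math.sqrt(float(max_pixels) / (width * height))
    # int() truncates, which keeps the pixel count under the limit
    return max(1, int(width * scale)), max(1, int(height * scale))


cols, rows = rescaled_size(3601, 3601)  # size of a 1 arc-second SRTM tile
print("gdal_translate -outsize %d %d dem.tif dem_small.tif" % (cols, rows))
```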

If you don’t have a DEM, follow these steps:

- Go to http://earthexplorer.usgs.gov/ and download an ASTER or SRTM tile

- Check which UTM zone you need (on Wikipedia, for example)

- Use the Raster ‣ Projections ‣ Warp tool to reproject the tile from WGS84 (EPSG:4326) to the ETRS89 / UTM spatial reference system.

- Use the Raster ‣ Extraction ‣ Clipper tool to keep only the area that you need.

- If needed, use the Raster ‣ Conversion ‣ Translate tool to rescale your DEM or orthoimage.
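The UTM zone can also be computed from the longitude: standard zones are 6° wide and numbered from 1, starting at 180° W. The EPSG codes of ETRS89 / UTM zones 28N to 38N are 25828 to 25838. A minimal Python sketch with hypothetical function names; it ignores the special zones around Norway and Svalbard:

```python
def utm_zone(lon):
    """Standard UTM zone number (1..60) for a longitude in degrees."""
    return int((lon + 180) // 6) % 60 + 1


def etrs89_utm_epsg(lon):
    """EPSG code of the ETRS89 / UTM zone covering lon (zones 28N to 38N only)."""
    zone = utm_zone(lon)
    if not 28 <= zone <= 38:
        raise ValueError("ETRS89 / UTM is only defined for zones 28N to 38N")
    return 25800 + zone


# Longitude near Lausanne
print("zone %d -> EPSG:%d" % (utm_zone(6.57), etrs89_utm_epsg(6.57)))
# → zone 32 -> EPSG:25832
```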

However, if you need good accuracy, prefer a DEM with a better resolution.

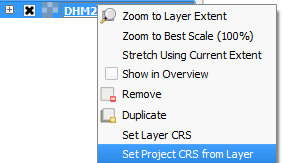

Initialization

The required data are a landscape picture and a DEM. You can optionally provide an orthoimage. The orthoimage must be in the same SRS as the DEM, although the two do not need to overlap exactly. If you use an orthoimage, the 3D view will appear black outside the orthoimage's bounding box. If you prefer working with a shaded DEM, don’t use an orthoimage. Moreover, your orthoimage should not exceed a few MB. After loading the data, check that the project SRS is the same as your DEM's SRS. If it is not, or if you’re not sure, right-click on the DEM layer and set the project SRS from the DEM SRS. After adding layers from the OpenLayers plugin, you have to reset the project SRS from the DEM.

Pose estimation

Pose estimation computes the orientation and position of the camera. In addition, the focal length needs to be estimated, while the central point is fixed at the center of the picture; these last two are internal camera parameters. There are two approaches to pose estimation: either you click on point features present both in the picture and in the canvas (the GCPs), or you opt for a more “gaming” approach, called here Virtual 3D.

| | GCP approach | Virtual 3D approach |
|---|---|---|
| Advantages | Some known parameters can be fixed; the result is mathematically rigorous; the focal length is usually well estimated | An acceptable estimate can be achieved rapidly; the method is simple and clear for everyone; the horizon line can be precisely adjusted |
| Disadvantages | Precision depends on the number of GCPs; precision depends on the quality of the GCPs; digitizing GCPs is time-consuming | The pose estimate is usually not accurate; the focal length is not easily determined; there is no direct measure of the precision |

It is possible to mix both approaches:

- Start with the Virtual 3D approach and save the pose as KML (or use Google Earth)

- Restart with the GCP approach and load the KML pose

- Use the 3D view at the current pose for GCP digitization

GCP approach

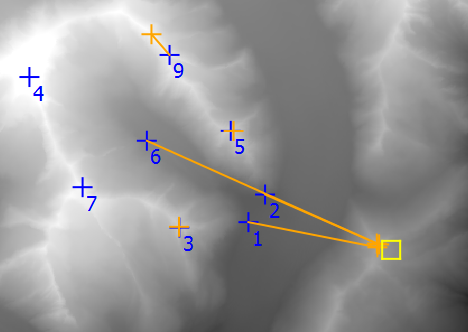

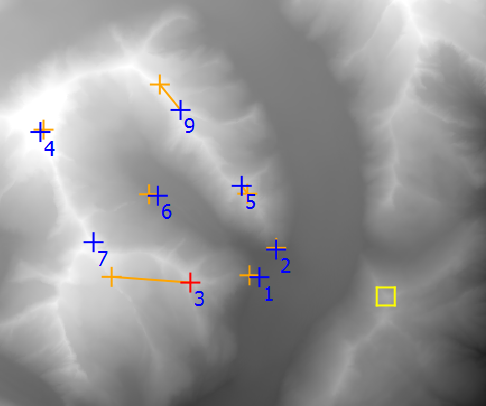

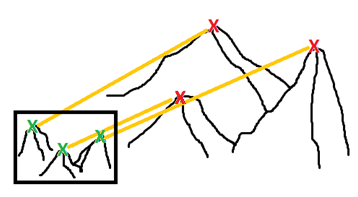

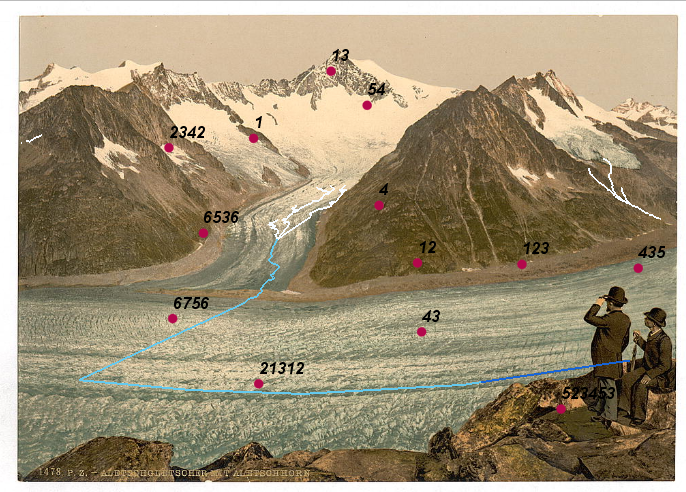

The pose can be estimated from 2D-3D correspondences identified in the picture and in the GIS. These correspondences are called Ground Control Points (GCPs). Each GCP adds precision to the pose estimate. A minimum of 6 points is required to solve for the unknowns. However, it is recommended to use more than 6 GCPs to gain accuracy and detect errors. The 3D viewer can make it easier to identify GCPs.
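The minimum of six GCPs follows from a simple count, assuming the standard DLT formulation: the projection matrix H is a 3 × 4 matrix defined only up to a scale factor, and each GCP gives two equations (one per image axis):

$$
\underbrace{3 \times 4 - 1}_{\text{unknowns of } H} = 11,
\qquad
\underbrace{2n}_{\text{equations}} \ge 11 \;\Longrightarrow\; n \ge 6
$$

With n GCPs, the 2n − 11 redundant equations are what make it possible to detect erroneous points.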

We use standard computer vision and photogrammetry algorithms for pose estimation. 2D points in the picture have to be linked to 3D coordinates in a world (or local) coordinate system. The Direct Linear Transform (DLT) algorithm provides the initial pose estimate. Several parameters can then be fixed through a least-squares refinement of the DLT result.

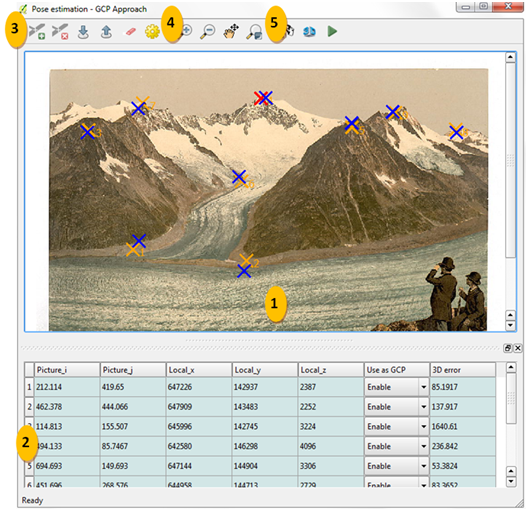

The GCP digitization interface is divided into five main areas:

- The scene

- The GCP table

- The GCP toolbar

- The scene toolbar

- The 3D toolbar

The Scene

The scene is a view of the picture. Select a GCP in the table, then click on the scene to get its picture coordinates. The scene toolbar (4) helps you navigate inside the picture and the canvas.

The GCP table

The first two columns are the 2D picture coordinates. The next three are the world coordinates (East, North and altitude). To fill the table, select a line and click on the scene to get the image coordinates. Then click on the corresponding point in the QGIS canvas to get the world coordinates. You can also get 3D coordinates from the 3D viewer with ctrl + Left Click; this last option is in fact recommended.

The next column indicates the GCP state. You can disable a GCP for the pose estimation; it is still projected, even though the algorithm does not use it. The last column indicates the error after pose estimation: the 3D distance between the GCP's 3D coordinates and the projection of its picture point onto the DEM.
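The values of the error column can be summarized, for example, with a root-mean-square value over the enabled GCPs. The sketch below computes the 3D distance described above and an RMS restricted to enabled points; the input layout, the function names and the sample coordinates are made up for illustration.

```python
import math


def gcp_errors(world_points, projected_points):
    """3D distance between each GCP and the projection of its picture point on the DEM."""
    return [math.sqrt(sum((a - b) ** 2 for a, b in zip(w, p)))
            for w, p in zip(world_points, projected_points)]


def rms(errors, enabled=None):
    """Root-mean-square error, optionally restricted to the enabled GCPs."""
    if enabled is not None:
        errors = [e for e, on in zip(errors, enabled) if on]
    return math.sqrt(sum(e * e for e in errors) / len(errors))


world = [(2533000.0, 1152000.0, 1450.0), (2533500.0, 1152300.0, 1620.0)]
projected = [(2533003.0, 1152004.0, 1450.0), (2533500.0, 1152300.0, 1632.0)]
errs = gcp_errors(world, projected)
print(errs)                               # → [5.0, 12.0]
print(rms(errs, enabled=[True, False]))   # → 5.0
```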

The GCP Toolbar

Create a new line in the GCP table

Delete the selected line in the GCP table

The scene toolbar

The 3D toolbar

Open the pose estimation dialog box

Close the GCP digitization window and open the monoplotter

Show the information contained in the EXIF data

Save the current pose estimate to a KML file

Load a pose estimate from a KML file

Navigating in the 3D viewer:

- Left mouse button pressed: move left-right and up-down

- Right mouse button pressed: rotate in tilt and azimuth

- Wheel: move forward and backward (press the wheel for finer placement)

Capabilities of the 3D viewer:

- ctrl + Left Click: get 3D coordinates for the selected GCP

- alt + Left Click: place the camera on the DEM at the clicked position

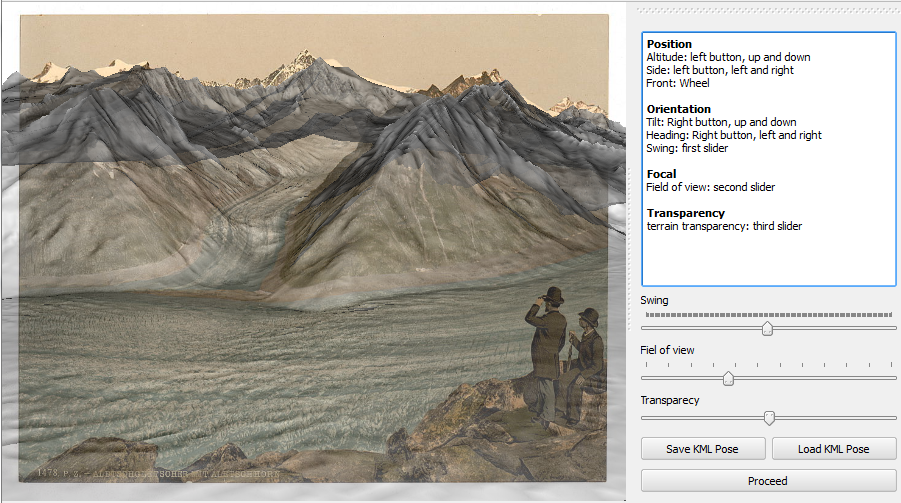

Virtual 3D approach

This approach relies on the user's ability to estimate the position, orientation and focal length of the camera by navigating in the virtual landscape. The pose estimation is done empirically: the camera travels in the virtual view until its location, orientation and focal length match the picture. You can save the pose to a KML file and open it directly in Google Earth. It is also possible to load a picture pose from Google Earth. This technique should be preferred for getting an initial pose that will then be refined with GCPs.
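KML, the format read by Google Earth, can store a viewpoint in a `<Camera>` element (longitude, latitude, altitude, heading, tilt, roll; a tilt of 0 means looking straight down). The following Python sketch writes and reads such an element with the standard library. We do not know the exact layout of the KML files written by Pic2Map, so the element choice and the function names are assumptions. Note that KML uses WGS 84 longitude/latitude, so a pose expressed in a projected SRS such as UTM must be converted first.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"
ET.register_namespace("", KML_NS)  # serialize KML elements without a prefix


def camera_kml(lon, lat, alt, heading, tilt, roll=0.0):
    """Minimal KML document holding a camera pose (angles in degrees, alt in metres)."""
    kml = ET.Element("{%s}kml" % KML_NS)
    doc = ET.SubElement(kml, "{%s}Document" % KML_NS)
    cam = ET.SubElement(doc, "{%s}Camera" % KML_NS)
    for tag, value in (("longitude", lon), ("latitude", lat), ("altitude", alt),
                       ("heading", heading), ("tilt", tilt), ("roll", roll)):
        ET.SubElement(cam, "{%s}%s" % (KML_NS, tag)).text = repr(float(value))
    # Altitude above sea level rather than above the terrain
    ET.SubElement(cam, "{%s}altitudeMode" % KML_NS).text = "absolute"
    return ET.tostring(kml, encoding="unicode")


def read_camera(kml_text):
    """Numeric fields of the first <Camera> element, as a dict."""
    cam = ET.fromstring(kml_text).find(".//{%s}Camera" % KML_NS)
    return {child.tag.split("}")[1]: float(child.text)
            for child in cam if not child.tag.endswith("altitudeMode")}


text = camera_kml(6.63, 46.52, 1200.0, heading=135.0, tilt=85.0)
print(read_camera(text))
```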

Monoplotter

Once the pose is set, you can launch the monoplotter. The coordinates of the pixels are obtained by projecting them onto the DEM. A poor-quality DEM or a poor pose estimate will degrade the accuracy.
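Projecting a pixel onto the DEM amounts to intersecting the pixel's viewing ray with the terrain. The plugin does this through OpenGL (see OpenGL Integration); the sketch below is a simple CPU alternative that marches along the ray in fixed steps until it drops below the terrain, then refines the crossing by bisection. `dem_height` is a hypothetical callable returning the terrain altitude at (x, y); a step larger than the terrain details can skip thin ridges.

```python
def intersect_dem(origin, direction, dem_height, step=1.0, max_dist=20000.0, iters=30):
    """First point where the ray origin + t * direction meets the DEM, or None."""
    def point(t):
        return tuple(o + t * d for o, d in zip(origin, direction))

    def above(t):
        x, y, z = point(t)
        return z > dem_height(x, y)

    if not above(0.0):
        return None  # the camera itself is below the terrain
    t_prev, t = 0.0, step
    while t <= max_dist:
        if not above(t):
            # The surface lies between t_prev and t: refine by bisection
            lo, hi = t_prev, t
            for _ in range(iters):
                mid = (lo + hi) / 2
                if above(mid):
                    lo = mid
                else:
                    hi = mid
            return point(hi)
        t_prev, t = t, t + step
    return None  # no hit within max_dist (e.g. a pixel showing the sky)


# Flat terrain at altitude 0, camera 100 m above it, looking 45° downwards
print(intersect_dem((0.0, 0.0, 100.0), (0.0, 1.0, -1.0), lambda x, y: 0.0))
# → (0.0, 100.0, 0.0)
```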

Visualization of layers

To visualize layers in your picture, add them to the canvas and press “refresh layers”. Points and polylines are currently supported; polygons have not yet been integrated in the plugin. The symbology is the same as the one used in the canvas: you can project simple, categorized or graduated symbology. However, you cannot use complex symbology, such as points represented by stars or double-line representations. Labels present in the canvas are also drawn in the monoplotter, but their font is not taken from the canvas; press “label settings” to control the label appearance.

Digitization

Digitizing works closely with the canvas. To digitize a new feature, make sure that the layer is in editing mode and that the “new feature” button is toggled.

Then use ctrl + Left Click in the monoplotter to digitize new features. The precision of the digitization depends on the quality of the DEM and of the pose estimate.

Measurements

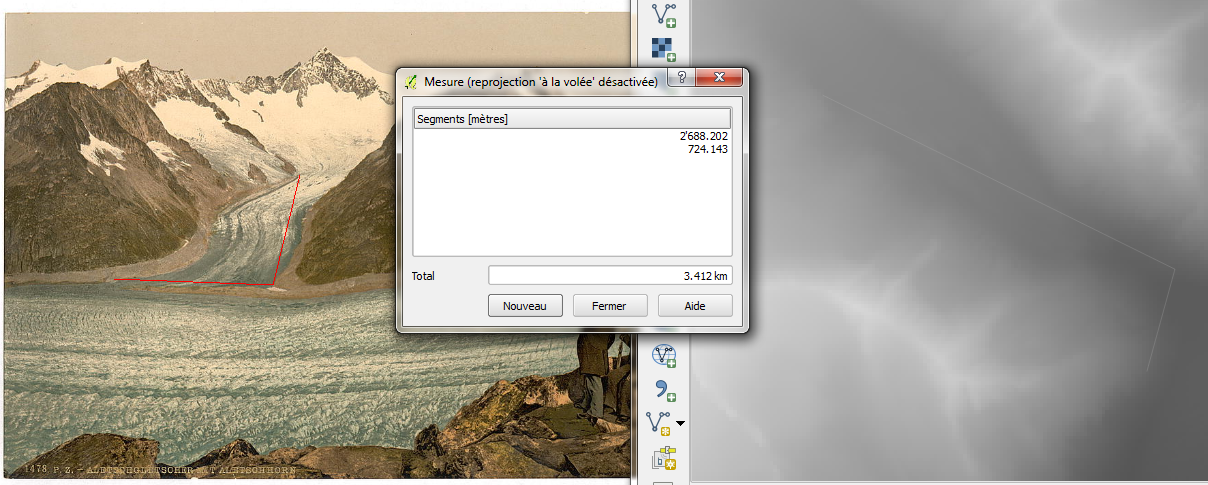

There are two different measuring tools. The “Measure on plane” button opens the standard QGIS measure tool and allows its use in the monoplotter. The second button, “Measure 3D”, opens an independent tool that acts directly on the DEM.

Measure on plane: you can measure in the monoplotter with ctrl + Left Click, as you would on the canvas. The measurements are made as if you had clicked in the canvas at the projected location.

Measure 3D: if you want to take the altitude into account, use the 3D measurement tool, which measures directly with the 3D coordinates from the DEM.
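To see why the two tools can give different results on steep terrain, compare the length of a polyline in the map plane with its length using the DEM altitudes. The helper names are hypothetical; they only illustrate the geometry:

```python
import math


def plane_length(points):
    """Length of a polyline measured in the map plane (altitude ignored)."""
    return sum(math.hypot(b[0] - a[0], b[1] - a[1]) for a, b in zip(points, points[1:]))


def length_3d(points):
    """Length of the same polyline using the DEM altitudes."""
    return sum(math.sqrt(sum((q - p) ** 2 for p, q in zip(a, b)))
               for a, b in zip(points, points[1:]))


# A 400 m horizontal distance that climbs 300 m
path = [(0.0, 0.0, 1000.0), (400.0, 0.0, 1300.0)]
print(plane_length(path), length_3d(path))  # → 400.0 500.0
```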

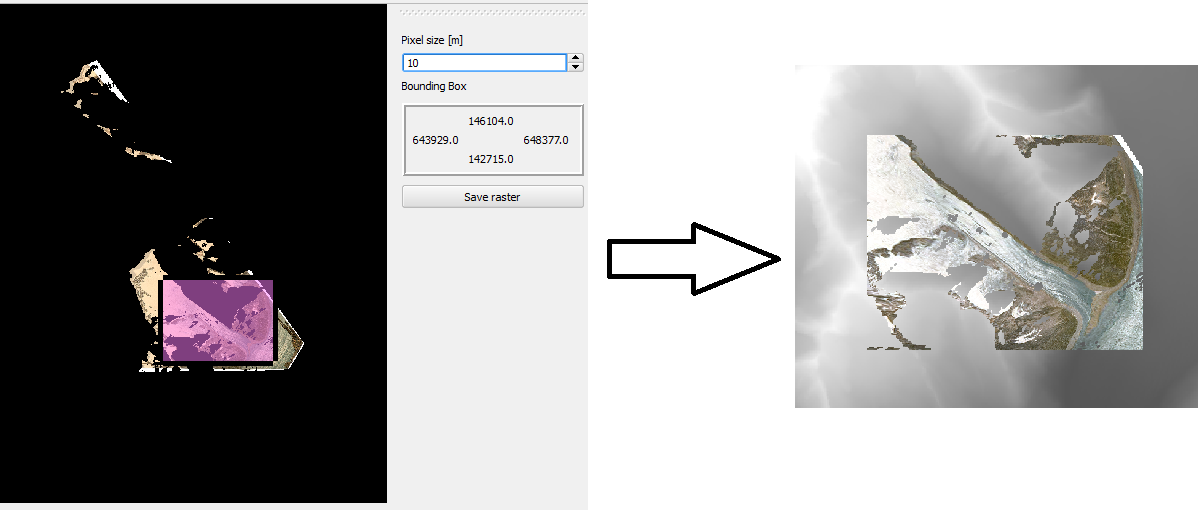

Orthorectification (Draping)

Draping means coloring the DEM surface with the picture data. The process is quite similar to a standard orthorectification. To get a georeferenced raster from your picture, click on “Drape on DEM”, then select an area in the preview window by dragging the mouse from the upper-left to the lower-right corner. The pixel size can also be set. Problems can arise if the pixel size is too small; keep a sensible value, of the same order as the resolution of your DEM.

You can save the raster in two formats: PNG, which creates a standard image that any image software can display, or GeoTIFF, which is georeferenced and can be opened with your favorite GIS software. The fourth band of the GeoTIFF raster can be used as a transparency band.
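For a north-up GeoTIFF, the georeferencing is fully described by the extent and the pixel size. The sketch below shows how the output dimensions and a GDAL-style geotransform `(x_min, pixel_size, 0, y_max, 0, -pixel_size)` follow from the area selected in the preview and the chosen pixel size. It is an illustration, not the plugin's code; the function name and the coordinates are hypothetical.

```python
import math


def drape_grid(x_min, y_min, x_max, y_max, pixel_size):
    """Output raster size and GDAL geotransform for a north-up draped raster."""
    cols = int(math.ceil((x_max - x_min) / pixel_size))
    rows = int(math.ceil((y_max - y_min) / pixel_size))
    # Origin at the upper-left corner; negative row step because rows go southwards
    geotransform = (x_min, pixel_size, 0.0, y_max, 0.0, -pixel_size)
    return cols, rows, geotransform


cols, rows, gt = drape_grid(2533000.0, 1152000.0, 2534000.0, 1152500.0, 2.0)
print(cols, rows, gt)
# → 500 250 (2533000.0, 2.0, 0.0, 1152500.0, 0.0, -2.0)
```

Halving the pixel size quadruples the number of pixels, which is one reason why very small pixel sizes cause problems.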

The draping process provides high-quality results: it uses OpenGL texturing to map every detail of the picture onto the heightmap. If you are interested in the details of the draping, you can consult the OpenGL documentation on texturing.

Tricks for a comfortable experience

- Don’t forget to always work in the SRS of your DEM!

About the DEM, orthoimage and picture:

- If you’re not familiar with GIS, get some help to obtain a good DEM. The steps in “Data requirement” may look simple, but trouble comes quickly.

- Don’t use a DEM with more than 1,000,000 pixels if fast computation is needed.

- If you can get a better DEM than ASTER or SRTM, don’t hesitate: it is worth it in most cases.

- The plugin tends to crash with large images (> 10 MB). This is due to GPU buffer limitations (buffers are generally of the order of the screen size).

- If you have to use a large DEM and the initial loading is too slow, use an orthoimage: the computation of the DEM shading is then skipped.

About the pose estimation:

- To get the world coordinates of GCPs, try the 3D viewer first; it is much easier than the orthogonal view.

- Try to place GCPs in every part of the picture. You can then refine them, for example with the OpenLayers plugin.

- The best configuration for the GCPs is a cube, i.e. points spread in all three dimensions. Don’t hesitate to put GCPs at the bottom of mountains, not only at the top.

- If you only know the approximate region where the picture was taken but still want to use Pic2Map, you can navigate in a virtual globe (WorldWind or Google Earth, for example) to find it!

- Check the pose estimate by opening the 3D viewer. It is initialized at the current pose estimate when opened.

- Particular cases of GCP reprojection are presented below. It is common to fall into one of these cases.

About the monoplotter:

- Depending on the number of layers in the canvas, refreshing the monoplotter can take some time. Features far from your DEM cause no problems: they are clipped, as are features behind the camera.

- Polygons are not yet supported by the monoplotter.

- Don’t try to use complex symbology.

- When draping, don’t choose too small a resolution value. It may not be supported if the ratio (number of DEM pixels × DEM resolution) / orthoimage resolution is too high.

- The measure tool doesn’t measure in the picture directly: it projects the mouse click into the canvas, so the measurement is actually done in the canvas. This leads to a counterintuitive behavior: for precise measurements in the picture, you have to zoom in at the right place in the canvas.

- The same applies to the digitization of new features.

Technical documentation

More detailed documentation with equations can be requested from gillian.milani@epfl.ch.

Pose estimation

This section presents the algorithms used for pose estimation from GCPs. In brief, the Direct Linear Transform (DLT) algorithm provides the initial guess of the pose. Then a least-squares algorithm is used to fix known parameters and refine the estimate.

The DLT algorithm gives H, the projective (homographic) transformation in U = H * X, where U is the pixel coordinate vector and X the 3D coordinate vector, both in homogeneous form. The first challenge is therefore to implement the DLT, which is not very hard. A more complex question is how to extract the projection parameters from H. The rotation matrix is not trivial to extract, and the position depends on the rotation estimate. The extracted rotation matrix can change radically with small changes in the GCP positions. A first estimate of the rotation matrix is extracted from the DLT equations. Then a Cholesky factorization is applied to turn this estimate into a proper rotation matrix.
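These steps can be sketched with numpy. The sketch estimates H by DLT (solving the homogeneous linear system with an SVD), then recovers the intrinsic matrix K, the rotation R and the camera center C with a Cholesky factorization: if M is the left 3 × 3 block of H, then M = K R with R orthonormal, so M Mᵀ = K Kᵀ. We also add Hartley-style coordinate normalization, which the text does not mention but which is standard practice for numerical stability. The GCPs must not all lie in one plane. This is a sketch under these assumptions, not the plugin's code.

```python
import numpy as np


def _normalizer(pts):
    """Similarity transform moving pts to their centroid, mean distance sqrt(dim)."""
    pts = np.asarray(pts, dtype=float)
    dim = pts.shape[1]
    centroid = pts.mean(axis=0)
    scale = np.sqrt(dim) / np.linalg.norm(pts - centroid, axis=1).mean()
    T = np.eye(dim + 1)
    T[:dim, :dim] *= scale
    T[:dim, dim] = -scale * centroid
    return T


def _homogeneous(pts, T):
    pts = np.asarray(pts, dtype=float)
    return np.c_[pts, np.ones(len(pts))] @ T.T


def dlt(pixels, points):
    """Estimate H (3x4, U ~ H X) from n >= 6 non-coplanar GCPs."""
    T2, T3 = _normalizer(pixels), _normalizer(points)
    rows = []
    for (u, v, _), x in zip(_homogeneous(pixels, T2), _homogeneous(points, T3)):
        rows.append(np.r_[x, np.zeros(4), -u * x])
        rows.append(np.r_[np.zeros(4), x, -v * x])
    # Homogeneous least squares: right singular vector of the smallest singular value
    _, _, vt = np.linalg.svd(np.array(rows))
    return np.linalg.inv(T2) @ vt[-1].reshape(3, 4) @ T3


def decompose(H):
    """Split H ~ K [R | -R C] into intrinsics K, rotation R and camera center C."""
    if np.linalg.det(H[:, :3]) < 0:
        H = -H                        # choose the sign that gives det(R) = +1
    M = H[:, :3]
    # M M^T = K K^T, so the Cholesky factor of (M M^T)^-1 is K^-T (lower triangular)
    L = np.linalg.cholesky(np.linalg.inv(M @ M.T))
    R = L.T @ M                       # K^-1 M: orthonormal by construction
    K = np.linalg.inv(L.T)
    C = -np.linalg.solve(M, H[:, 3])
    return K / K[2, 2], R, C


# Synthetic check: project random GCPs with a known camera, then recover it
rng = np.random.default_rng(0)
K_true = np.array([[1500.0, 0.0, 960.0], [0.0, 1500.0, 540.0], [0.0, 0.0, 1.0]])
a = np.deg2rad(10.0)
tilt = np.array([[1, 0, 0], [0, np.cos(a), -np.sin(a)], [0, np.sin(a), np.cos(a)]])
look_north = np.array([[1.0, 0, 0], [0, 0, -1], [0, 1, 0]])  # world axes E, N, up
R_true = tilt @ look_north
C_true = np.array([100.0, -500.0, 300.0])
points = rng.uniform([0, 0, 0], [1000, 1000, 200], size=(10, 3))
uvw = np.c_[points, np.ones(10)] @ (K_true @ np.c_[R_true, -R_true @ C_true]).T
pixels = uvw[:, :2] / uvw[:, 2:]

K, R, C = decompose(dlt(pixels, points))
print(np.round(K, 3), np.round(C, 3), sep="\n")
```

With real, noisy GCPs, this closed-form result is only a starting point for the least-squares refinement described below.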

According to the collinearity equations, the parameters are the position (x, y, z), the orientation (t, h, s), the focal length (f) and the central point (u0, v0). For working with OpenGL, it made sense to fix u0 and v0 at 0, i.e. at the picture center. This is why the least-squares algorithm is applied even when the user does not fix any parameter: the central point is always fixed to zero after the DLT calculation.
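For reference, here is one common form of the collinearity equations, with the camera position (x, y, z), a GCP at (X, Y, Z), and the entries r_ij of the rotation matrix built from (t, h, s). It is written for a camera looking along its +z axis; many photogrammetry texts use a minus sign instead, for a camera looking along −z, and we do not know the exact rotation convention used in the plugin:

$$
u = u_0 + f\,\frac{r_{11}(X - x) + r_{12}(Y - y) + r_{13}(Z - z)}{r_{31}(X - x) + r_{32}(Y - y) + r_{33}(Z - z)},
\qquad
v = v_0 + f\,\frac{r_{21}(X - x) + r_{22}(Y - y) + r_{23}(Z - z)}{r_{31}(X - x) + r_{32}(Y - y) + r_{33}(Z - z)}
$$

With u0 = v0 = 0 fixed, seven unknowns remain (x, y, z, t, h, s, f), and each GCP contributes these two equations.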

The least-squares variant implemented is the Levenberg-Marquardt algorithm. It improves the stability of the least-squares approach, which is very unstable in our case because of the strong non-linearity of the collinearity equations. At the same time, it keeps good performance compared to a standard gradient approach, since some parameters, such as the rotation, can change considerably between the initial DLT estimate and the least-squares result.
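As a rough illustration of how Levenberg-Marquardt can refine parameters while keeping some of them fixed, here is a compact numpy sketch with a forward-difference Jacobian. The `fixed` list mirrors the idea of fixing known parameters; the damping schedule (×10 on failure, ÷10 on success) is a common textbook choice, not necessarily the plugin's. The demo fits a simple exponential model rather than the camera model to stay short.

```python
import numpy as np


def levenberg_marquardt(residuals, p0, fixed=(), iters=100, lam=1e-3, eps=1e-6):
    """Minimize sum(residuals(p)**2), keeping the parameters listed in `fixed` unchanged."""
    p = np.asarray(p0, dtype=float).copy()
    fixed = set(fixed)
    free = np.array([i for i in range(len(p)) if i not in fixed])
    r = residuals(p)
    cost = r @ r
    for _ in range(iters):
        # Forward-difference Jacobian with respect to the free parameters only
        J = np.empty((len(r), len(free)))
        for k, i in enumerate(free):
            shifted = p.copy()
            shifted[i] += eps
            J[:, k] = (residuals(shifted) - r) / eps
        A, g = J.T @ J, J.T @ r
        # Damped normal equations (Marquardt scaling of the diagonal)
        delta = np.linalg.solve(A + lam * np.diag(np.diag(A)), -g)
        trial = p.copy()
        trial[free] += delta
        r_trial = residuals(trial)
        if r_trial @ r_trial < cost:
            # Success: accept the step and move towards Gauss-Newton
            p, r, cost, lam = trial, r_trial, r_trial @ r_trial, lam / 10
        else:
            # Failure: increase damping, moving towards (scaled) gradient descent
            lam *= 10
        if np.linalg.norm(delta) < 1e-12:
            break
    return p


# Fit y = a * exp(b * x) + c while keeping c fixed at its known value
x = np.linspace(0.0, 4.0, 20)
y = 2.0 * np.exp(-0.5 * x) + 1.0


def res(p):
    a, b, c = p
    return a * np.exp(b * x) + c - y


p = levenberg_marquardt(res, [1.5, -0.3, 1.0], fixed=(2,))
print(np.round(p, 4))
```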

QGIS Interactions

Following the principle of extension, a plugin should reuse as many of the tools already available in the software as possible. The available QGIS modules have been mainly helpful for layer visualization and layer digitization.

In the first case, a loop iterates over all symbology layers and stores information about width, color, categories and graduation. Then a second loop iterates over the layer's features and tests whether each feature is visible. If it is, the index of its symbology is recorded in an index array. In the painting loop, each feature is drawn according to the geometry stored in an array, and its symbology is selected through the index array.
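The loops described above can be sketched in plain Python: the first builds a lookup of symbols, the second keeps only visible features together with the index of their symbol, and the painting loop retrieves the symbol through that index. The feature, symbol and visibility representations are hypothetical stand-ins, not the QGIS API.

```python
def prepare_drawing(features, symbols, is_visible, default=0):
    """Keep visible features and the index of the symbol each one uses.

    features: iterable of (geometry, category) pairs
    symbols: list of (category, symbol) pairs read once from the layer's renderer
    is_visible: callable telling whether a geometry is visible in the picture
    """
    # First loop: index the symbology once per layer
    index_of = {category: i for i, (category, _) in enumerate(symbols)}
    geometries, indices = [], []
    # Second loop: visibility test, remembering the symbol index of kept features
    for geometry, category in features:
        if is_visible(geometry):
            geometries.append(geometry)
            indices.append(index_of.get(category, default))
    return geometries, indices


def paint(geometries, indices, symbols, draw):
    """Painting loop: the symbol is looked up through the index array."""
    for geometry, i in zip(geometries, indices):
        draw(geometry, symbols[i][1])


def in_front(geometry):
    return geometry[1] >= 0  # stand-in for "in front of the camera"


symbols = [("road", "red"), ("river", "blue")]
features = [((0, 5), "road"), ((5, -1), "river"), ((3, 2), "river")]
geoms, idx = prepare_drawing(features, symbols, in_front)
drawn = []
paint(geoms, idx, symbols, lambda g, s: drawn.append((g, s)))
print(drawn)  # → [((0, 5), 'red'), ((3, 2), 'blue')]
```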

When a new feature is created in the picture, it is not created directly from the projection. Instead, a virtual click is generated on the canvas at the projected coordinates. This guarantees the same behavior as if the click had been made directly on the canvas.

The measure tool is currently affected by some implementation difficulties.

OpenGL Integration

OpenGL is the backbone of the plugin. Here is an explanation of what actually happens in the monoplotter; it will be crystal clear if you have already used the 3D approach for pose estimation.

In the monoplotter, the picture is drawn in the background. Then the DEM is created exactly as in the 3D viewer, and the camera is initialized at the previous pose estimate. Finally, the DEM is made completely transparent. So when you digitize new features or take measurements, you think you’re clicking on the picture, but you’re actually clicking on the DEM, which is transparent in front of the picture!

If you are interested in how the 3D view is created from the DEM, the code is open source. If you find the code hard to read, you can send me an email.

When no orthoimage is provided, the first progress bar after initialization corresponds to the computation of normals, which are needed to shade the DEM. We could use a standard QGIS (GDAL) tool to produce a shaded DEM, but knowing the focal length allows us to enable lighting in OpenGL. The shading therefore moves with the camera, which ensures good illumination whatever the pose estimate.
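Per-vertex normals of a gridded DEM can be computed from the height gradients. The numpy sketch below assumes a regular grid with square cells of size `cell` and rows ordered from north to south (hence the sign flip on the row gradient); a Lambertian term then gives a diffuse shading factor. It is a CPU illustration of the idea, whereas the plugin lets OpenGL do the lighting; the function names are hypothetical.

```python
import numpy as np


def dem_normals(dem, cell):
    """Unit normal (east, north, up) at each DEM vertex; row 0 is the northern edge."""
    dz_drow, dz_dcol = np.gradient(np.asarray(dem, dtype=float), cell)
    dz_dx = dz_dcol        # columns grow eastwards
    dz_dy = -dz_drow       # rows grow southwards
    n = np.dstack((-dz_dx, -dz_dy, np.ones_like(dz_dx)))
    return n / np.linalg.norm(n, axis=2, keepdims=True)


def lambert(normals, light):
    """Diffuse shading factor in [0, 1]; `light` points towards the light source."""
    l = np.asarray(light, dtype=float)
    l = l / np.linalg.norm(l)
    return np.clip(normals @ l, 0.0, 1.0)


# A slope rising towards the north, lit from the south and above
dem = np.array([[3.0, 3.0, 3.0], [2.0, 2.0, 2.0], [1.0, 1.0, 1.0]])
print(np.round(lambert(dem_normals(dem, 25.0), light=(0.0, -1.0, 1.0)), 3))
```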

Draping

In brief, each DEM vertex is assigned picture coordinates. Each DEM triangle formed by three vertices is then filled with the picture, stretched according to the coordinates of its vertices. This makes it possible to get a better color resolution than the DEM resolution.
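Assigning picture coordinates to each DEM vertex amounts to projecting the vertices with the estimated camera and dividing by the picture size, which gives texture coordinates in [0, 1]. The numpy sketch below assumes a 3 × 4 projection matrix H of the form K [R | −R C] (so that points in front of the camera have a positive third coordinate) and a picture uploaded bottom row first, so that t = 0 is the bottom of the picture. Vertices behind the camera or outside the picture are flagged, for example so that they can be left transparent. Names are hypothetical.

```python
import numpy as np


def texture_coords(vertices, H, width, height):
    """Texture coordinates (s, t) of DEM vertices and a validity mask."""
    X = np.c_[np.asarray(vertices, dtype=float), np.ones(len(vertices))]
    u, v, w = (X @ H.T).T
    in_front = w > 0
    with np.errstate(divide="ignore", invalid="ignore"):
        s = u / w / width
        t = 1.0 - v / w / height  # picture rows counted from the top
    valid = in_front & (s >= 0) & (s <= 1) & (t >= 0) & (t <= 1)
    return np.c_[s, t], valid


K = np.array([[1000.0, 0, 500], [0, 1000.0, 375], [0, 0, 1]])
R = np.array([[1.0, 0, 0], [0, 0, -1], [0, 1, 0]])  # looking north, world axes E, N, up
H = K @ np.c_[R, np.zeros(3)]                        # camera at the origin
coords, valid = texture_coords([(0, 100, 0), (0, -100, 0), (1000, 100, 0)], H, 1000, 750)
print(coords[0], valid)
```

Because the picture is interpolated inside each triangle, the color resolution is limited by the picture rather than by the DEM grid.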